Books of Interest

Website: chetyarbrough.blog

Capitalism (A Global History)

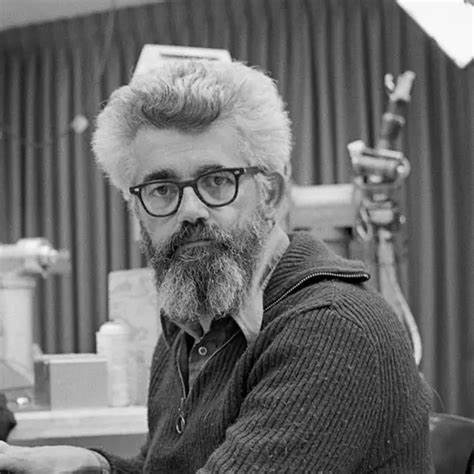

Author: Sven Beckert

Narration by: Soneela Nankani & 3 more

Sven Beckert (Author, Professor of History at Harvard, graduated from Columbia with a PhD in History.)

Professor Beckert defines capitalism as an economic form in which privately owned capital is reinvested in an effort to produce more capital. With capitalism defined that way, Beckert suggests it reaches back to 1000 CE, long before the 18th century England that some argue was its birthplace. Beckert argues the Italian city-states, like Venice, Genoa, and Florence, are the origin of capitalism. That is when wealthy Italian families invested accumulated wealth in long-distance trade networks and early banks. Beckert’s point is that England simply expanded what had begun hundreds of years earlier with trade investment by wealthy Italian families.

Economic theories.

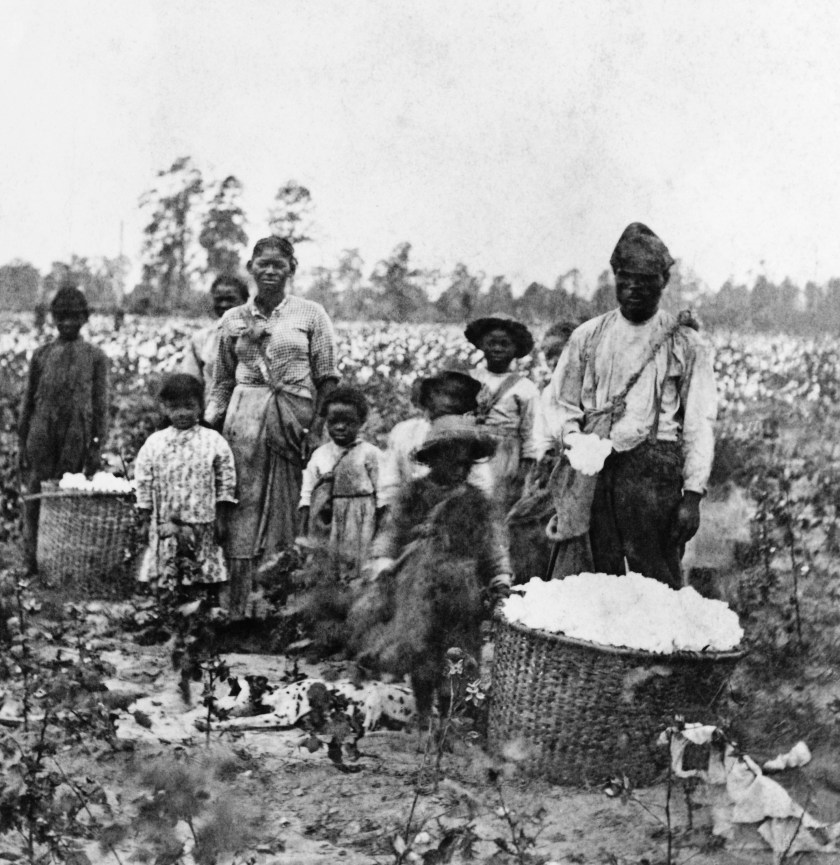

Beckert briefly compares many economic theories like capitalism, Marxism, Keynesianism, and Polanyian theories which he calls institutional economics. All bear the flaws of human nature. His economic history is about the addition of slavery to capitalism in the late 15th through 18th centuries. Beckert notes Portugal, Spain, Britain, France, and the Netherlands strengthened their capitalist economies. They were able to secure cheap, controllable labor, expand production, and increase profits with slavery.

Beckert explains the monumental changes and expansion that occur with England’s adoption of early capitalism. As early as the 17th century, Beckert notes England revolutionizes capitalism in good and morally corrupt ways. Nation-state power combines with private capital to create a massive capitalist influence around the world. With the dominance of British naval power, colonialism expands, slavery becomes part of international trade, and capitalist monopolies grow to dominate economies. England’s industrial revolution, with mechanized production, factory labor, and capital accumulation, is able to expand market influence and hugely improve the country’s infrastructure and legal protections. Creating patent laws raises the potential for monopolization of some market goods.

For several reasons, slavery declines during the later years of industrialization. However, Beckert notes its immorality is not the primary reason.

Free labor became more efficient for capital accumulation. The enslaved became discontented with their role as cheap labor. By the 19th century, slavery became politically and legally incompatible with capitalism. Capitalists began to understand how they could gain more wealth by indenturing rather than enslaving workers, offering sharecropping, or leasing convicts. By being more flexible with the political and physical environment in which labor worked, capitalists found they could get cheaper labor through contracts with prisons or sharing of income than through slave ownership. Slavery faded because capitalists found new ways to reduce costs of labor. At the same time, slave revolts were escalating, the U.S. Civil War was being fought, policing of slavery became too expensive, and investors felt their investments would be at risk in companies dependent on slave labor. Morality had little to do with abolishing slavery in Beckert’s opinion.

Beckert shows how capitalism systematically expands investment of private capital. Capital is put to work rather than hoarded and consumed by a singular family, political entity, or economic system. Capitalism provides a potential for moving beyond slave-based economies, though racial discrimination remains a work in progress. Beckert notes capitalism is different from other economic systems because it invests private capital that theoretically moderates the need for a nation-state’s capital investment in the health and welfare of a nation’s citizens.

The interesting judgement made by Beckert is that capitalism’s foundation was initially based on slavery, colonialism, and state violence.

The violence of which he writes is based on several factors, i.e., historical slavery, territorial seizure, nation-backed monopolies, worker mistreatment or suppression, and global coercion with military backing. Beckert seems to admit no major historical economic system is free of violence. It seems every economic system is imperfect. Violence appears a fundamental part of human nature in all presently known economic systems.

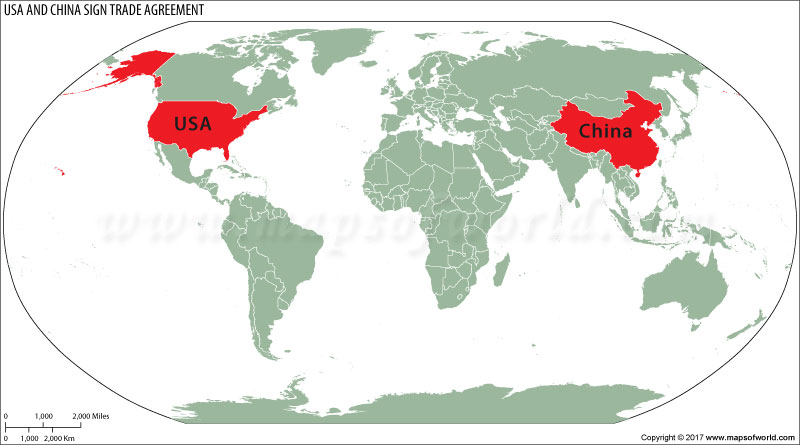

In the mid to late twentieth century, Beckert notes how manufacturing becomes a global rather than local capitalist activity.

This reorganization creates global inequalities that America is late to understand and adjust to in its capitalist economy. The financial and investment industry of America benefits by becoming world investors, but the local economy fails to remain competitive with the production capabilities of other countries. To become competitive seems an unreasonable expectation for America because of the cost of labor. Trump’s belief appears to be that the solution is to force a return of manufacturing to America. In pursuing that, the rich seem to ignore the fact that, to be competitive, manufacturing has to have its costs reduced. Where will that reduction come from? Reducing labor costs creates a downward spiral for the families dependent on income from labor. Can America capture a larger part of raw materials for manufacturing to offset higher costs of labor? That is conceivable, but it will require a more focused American investment in raw materials that other nations are equally interested in capturing.

AI is a tool of human beings and will be misused by some leaders in the same way atom bombs, starvation, disease, climate, and other maladies have harmed the sentient world.

A capitalist economy’s violence has multiple drivers, but AI has the potential for early detection of conflict hotspots, better predictive policing, more efficient allocation of material resources, and improved mental-health triage and intervention. AI is not a perfect answer to human nature’s flaws or the reestablishment of manufacturing in America. There is the downside of the surveillance society pictured by George Orwell.

A surveillance society is a choice that can be made with careful deliberation or by helter-skelter judgement to return manufacturing to America without clearly understanding its impact on American society. That is the underlying importance of Beckert’s history of capitalism.