Books of Interest

Website: chetyarbrough.blog

BURN-IN (A Novel of the Real Robotic Revolution)

Authors: P. W. Singer, August Cole

Narration by: Mia Barron

Peter Warren Singer (on the left) is an American political scientist who is described by the WSJ as “the premier futurist in the national security environment”. August Cole, his co-author, is also a futurist and a regular speaker before US and allied government audiences.

As someone interested in Artificial Intelligence, I started, stopped, and started again to listen to “Burn-In”.

The book is about human adaptation to robotics and A.I. It shows how humans, institutions, and societies may use these technologies to better serve society on the one hand and to destroy it on the other. Some chapters were discouraging and boring to this listener because of tedious explanations of robot use in the future. The initial test takes place in the FBI, an interesting choice in view of the FBI’s history, which has been rightfully criticized but also acclaimed by American society.

The starting, stopping, and restarting was a result of the authors’ unnecessary diversion into a virtual reality game played by inconsequential characters.

In an early chapter, several gamers engage in a VR session that distracts listeners from the book’s subject of Artificial Intelligence. Later chapters suffer the same defect. However, there are some surprising revelations about A.I.’s future.

The danger to society’s future remains the power of knowledge. The authors note that, in truth, A.I.’s lack of knowledge is what has really become power. Presumably, that means technology needs to be controlled by human-created algorithms that limit the knowledge of A.I. systems that may harm society.

The integration of A.I. into society has massive implications for the military, industrial, economic, and societal roles of human beings. The principles of human work, social relations, capitalist and socialist economies, and their governance are changed by advances in machine learning based on Artificial Intelligence. Machine learning may cross the threshold between safety and freedom and become a system of control with the potential to destroy human societies. At one extreme is China’s surveillance state; at the other is Western societies’ belief in relative privacy.

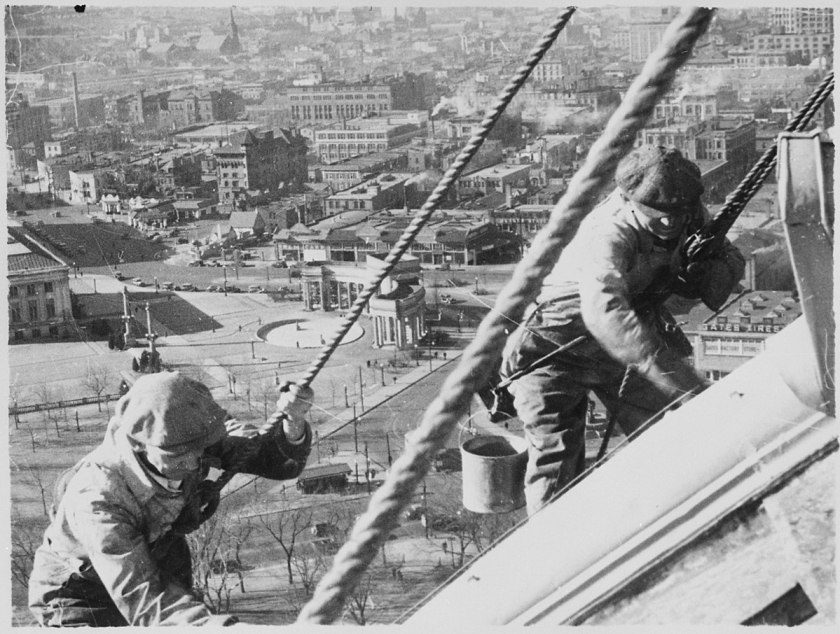

Robot evolution.

Questions of accountability become blurred when self-learning machines gain understanding beyond human capabilities. Do humans choose to trust their instincts or a machine’s more comprehensive understanding of facts? Who adapts to whom in the age of Artificial Intelligence? These are the questions raised by the authors’ story.

The main character of Singer and Cole’s story is Lara Keegan, a female FBI agent. She is a seasoned investigator who is assigned a “state of the art” police robot. The relationship between human beings and A.I. robots is explored. How much can a human trust a robotic partner? What control does a human exercise over an A.I. robot partner? What autonomy does a robot assigned to a human partner have? The partnership of human and robot in policing society is explored in “Burn-In”. The authors’ judgement is nuanced.

In “Burn-In”, a flood threatens Washington, D.C., the city in which Keegan and the robot work.

The robot’s aid to Keegan saves the life of a woman threatened by the flood as water fills an underground subway. Keegan hears the woman calling for help and asks the robot to rescue her. The robot submerges itself in the subway’s floodwaters, saves the woman, and returns to receive direction from Keegan to begin building a barrier to protect other citizens near the capitol. The robot moves heavy sacks filled with sand and dirt while nearby citizens help load more sacks. The robot tirelessly builds the barrier with a strength and efficiency that the people alone could not have matched. The obvious point is that cooperation between robot and human benefits society.

The other side of that positive assessment is that a robot cannot be held responsible for work that may inadvertently harm humans.

Any human assigned an A.I. robot loses his or her privacy because the robot’s programming knows the controller’s background, analyzes his/her behavior, and understands its assigned controller from that behavior and background knowledge. Once an assignment is made, the robot is directed by a human who may or may not act in the best interest of society. Action is exclusively directed by the robot’s human companion. A robot is unlikely to have intuition, empathy, or moral judgement in carrying out the direction of its assigned human partner. There is also the economic effect of lost human employment as a result of automation and the creation of robot partners and laborers.

A.I.’s contribution to society is similar to the history of nuclear power: it will be used constructively or destructively by human beings. On balance, “Burn-In” concludes that A.I. will mirror society’s values. As has been noted in earlier book reviews, A.I. is a tool, not a controller of humanity.