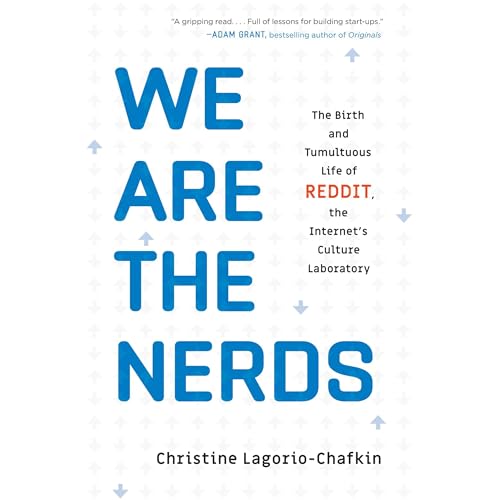

Books of Interest

Website: chetyarbrough.blog

WE ARE THE NERDS (The Birth and Tumultuous Life of Reddit, the Internet’s Culture Laboratory)

Author: Christine Lagorio-Chafkin

Narration by: Chloe Cannon

Christine Lagorio-Chafkin (Author, reporter, podcaster based in New York.)

On relistening, “We Are the Nerds” could be reviewed from the perspective of the future of newspapers, but that would diminish the tragedy of Aaron Swartz’s suicide.

The original founders of what became known as Reddit were Steve Huffman and Alexis Ohanian, graduates of the University of Virginia. A third partner, Aaron Swartz, is invited into the company because of his tech experience in creating a company called Infogami, which merged with Reddit. With the addition of Infogami, the founders created a parent organization called “Not a Bug, Inc.” Swartz insists on being called a co-founder because of his contribution to Reddit as a programmer. That insistence rankled Huffman and Ohanian, and it grew into a resentment that fills the pages of the author’s story.

Steve Huffman on the left; Alexis Ohanian, his wife Serena Williams, and their daughter on the right.

The author seems to minimize Swartz’s contribution to Reddit despite the framework he created, which helped Reddit grow quickly into an open, community-driven platform with wide cultural impact. Swartz’s code appears to have been an important step in making Reddit usable by the public. However, in fairness to the original founders, the author implies that his contribution pales in comparison with the extensive coding and work done by Huffman. The point is that this conflict becomes an irritant that leads to Swartz’s departure from Reddit in 2007, after Condé Nast acquired the company in 2006. That acquisition made all three partners millionaires.

Swartz’s life and premature death are a tragic encomium to the story of Reddit’s success as a public forum.

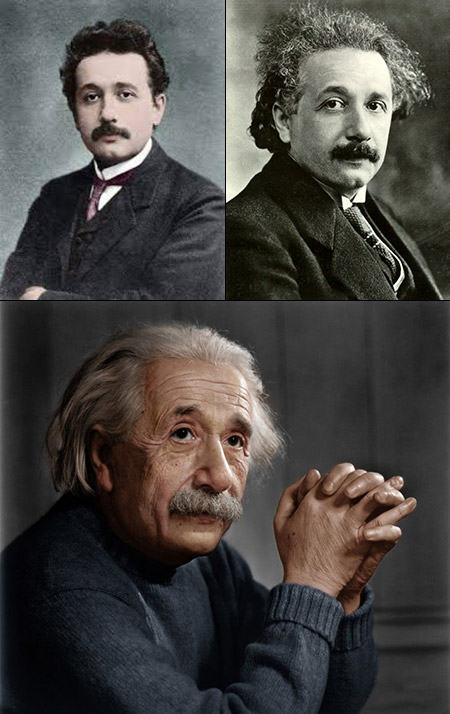

By some measures, Swartz was a brilliant human being, but his intelligence was accompanied by what might be characterized as a self-destructive personality. His ability as a computer nerd was evident in his high school days in Highland Park, Illinois. He went on to Stanford, but its educational regimen led him to leave after his first year; he preferred independent learning. Swartz’s remarkable ability led him to become a research fellow at Harvard University in 2010. He became a self-taught intellectual with an activist belief in academic freedom that eventually led him to rebel against authority. He was arrested in 2011 for allegedly breaking into MIT’s computer network without authorization. He was charged with computer fraud and faced up to 35 years in prison and a million-dollar fine. On January 11, 2013, at the age of 26, Swartz hanged himself.

Condé Nast, an American mass media company founded in 1909.

Huffman and Ohanian believed Swartz had contributed less than they had to creating the company they sold to Condé Nast. Swartz’s idealism and independence conflicted with the priorities of Reddit’s original founders, who seemed more interested in building a public platform that could make them rich. Though Ohanian believed they sold too soon, all three agreed to Condé Nast’s final offer, which made them millionaires.

In retrospect, Ohanian may have been right about the future value of Reddit. Condé Nast spun Reddit off as an independent subsidiary of Advance Publications, and it became a 42-billion-dollar success by 2025. Today, Huffman’s net worth is estimated at $1.2 billion as a result of his Reddit shares. Though Ohanian may not have held on to his shares, his net worth is estimated at $150-$170 million. Not bad for two University of Virginia graduates. However, as Plato observed, “The greatest wealth is to live content with little.” Swartz’s life seems to have had little to do with a desire for wealth.

“We Are the Nerds” is a story about “Nerdom” and the tragic loss of Aaron Swartz to his loving family and the world of coding.