Books of Interest

Website: chetyarbrough.blog

Rebooting AI (Building Artificial Intelligence We Can Trust)

By: Gary Marcus and Ernest Davis

Narrated By: Kaleo Griffith

These two academics explain much of the public’s misunderstanding of the current benefits and threats of Artificial Intelligence.

Marcus and Davis note that A.I. cannot read and does not think but only repeats what it is programmed to report.

They are not suggesting A.I. is useless, only that its present capabilities are much more limited than the public believes. In terms of product search and economic benefit to retailers, A.I. is a gold mine. But the ability to safely move human beings in self-driving cars, free humanity from manual labor, or predict cures for humanity’s diseases lies far in the future. A.I. is still a newborn.

Self-driving cars, robot servants, and cures for medical maladies remain works in progress for Artificial Intelligence.

Marcus and Davis note that A.I.’s usefulness remains fully dependent on human reasoning. It is a tool for recalling documented information and performing repetitive work. A.I. is not sentient, nor is it capable of reasoning about the information in its memory. Because it lacks that capability, its answers are based on whatever information has been fed to it. It does not reason its way to an answer; it only recites responses drawn from the information programmed into its memory. If those sources conflict, the answers one receives from A.I. may be right, wrong, contradictory, or unresponsive. You can as easily get a wrong answer from A.I. as a right one, because it is only repeating what it has gathered from the past.

What Marcus and Davis show is how important it is that questions asked of Microsoft’s Copilot, ChatGPT, Watson, or some other A.I. platform be phrased carefully.

The value of A.I. is that it can help one recall pertinent information, but only if questions are precisely worded. This is a valuable supplement to human memory, but it is not a reasoned or infallible resource.

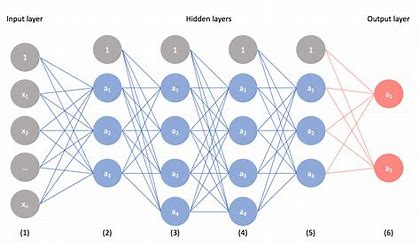

Marcus and Davis explain that “Deep Learning” is not a substitute for human reasoning but a supplement that provides more precisely recorded information.

Even multilayered neural networks, such as those used in deep learning, which attempt to mimic human reasoning by finding patterns in raw data, can be wrong or confused. One is reminded of the Socratic saying, “I know that I know nothing.” Truth is always hidden within a search for meaning, i.e., a gathering of information.

The true potential of A.I. lies in its continued consumption of every source of information, so that it can respond to queries from a comprehensive base of knowledge. The idea of an A.I. that can read, hear, and collate all the information in the world is at once frightening and thrilling.

The risk is the loss of human freedom. The reward is the power of understanding. However, the authors explain there are many complications before A.I. can usefully capitalize on all the information in the world. Information has to be understood in the context of its contradictions, its ethical consequences, its biases, and the inherent unpredictability of human behavior. Even with knowledge of all the information in the world, decisions based on A.I. do not ensure the future of humanity. Should humanity trust A.I. to recommend what is in humanity’s best interest based on past knowledge?

Marcus and Davis argue A.I. is not, does not, and will not think.

A.I. will continue to grow as an immense gatherer of information. Will it ever think? Can, should, or will future prediction and political policy be based only on knowledge of the past?