Books of Interest

Website: chetyarbrough.blog

A Brief History of Artificial Intelligence (What It Is, Where We Are, and Where We Are Going)

By: Michael Wooldridge

Narrated By: Glen McCready

Michael Wooldridge (Author, British professor of Computer Science, Senior Research Fellow at Hertford College, University of Oxford.)

Wooldridge served as President of the International Joint Conference on Artificial Intelligence from 2015 to 2017, and President of the European Association for AI from 2014 to 2016. He has received a number of A.I.-related service awards in his career.

Alan Turing (1912-1954, Mathematician, computer scientist, cryptanalyst, philosopher, and theoretical biologist.)

Wooldridge’s history of A.I. begins with Alan Turing, who has the honorific title of “father of theoretical computer science and artificial intelligence”. Turing is best known for his role in breaking the German Enigma code in WWII with the development of an electromechanical code-breaking machine, the Bombe. He went on to propose the Turing test, which evaluates whether a machine’s answers to questions are indistinguishable from those of a human. Sadly, he is equally well known for being publicly persecuted for his homosexuality. He committed suicide in 1954, at 41 years of age.

Wooldridge explains that A.I. has had a roller-coaster history of highs and lows, with new highs in this century.

Breaking the Enigma code is widely acknowledged as a game changer in WWII. It shortened the war and gave the Allied powers a strategic advantage. However, Wooldridge notes computer utility declined in the 70s and 80s because applications relied on laboriously programmed rules that introduced biases, ethical concerns, and prediction errors. Expectations of A.I.’s predictive power seemed exaggerated.

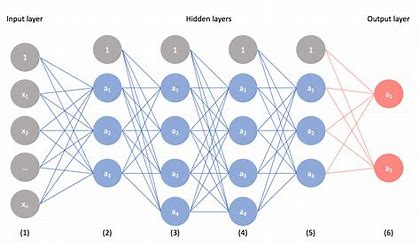

The idea of a system of connected artificial neurons was proposed in 1943 by Warren McCulloch and Walter Pitts.

In 1958, Frank Rosenblatt developed the “Perceptron”, a program based on McCulloch and Pitts’s idea that made computers capable of learning. However, this was a cumbersome programming process that failed to give consistent results. In the 80s, machine learning became more usefully predictive with Geoffrey Hinton’s work on backpropagation, i.e., an algorithm that measures the errors in a network’s predictions and feeds back corrections that improve them. In 1986, Hinton went on to develop a neural network that worked like the synapse structure of the brain but with far fewer connections. Even a limited neural network gave computers a capability for reading text and collating information.

Geoffrey Hinton (The “Godfather of AI”, winner of the 2018 Turing Award.)

Then, in 2006, Hinton developed a Deep Belief Network, a type of generative neural network that led to deep learning. Neural networks with more connections improved image recognition, speech processing, and natural language understanding. In the 2000s, Google acquired a deep learning company that could crawl and index the internet. Fact-based decision-making, and the accumulation of data, paved the way for better A.I. utility and predictive capability.

Face recognition capability.

What seems lost in this history is the fact that all of these innovations were products of human cognition and creativity.

Many highly educated and inventive people like Elon Musk, Stephen Hawking, Bill Gates, Geoffrey Hinton, and Yuval Harari have warned that AI is a threat to humanity. Musk calls AI a big existential threat and compares it to summoning a demon. Hawking felt AI could evolve beyond human control. Gates has expressed concern about job displacement, with long-term negative consequences and ethical implications that would harm society. Hinton believes AI could outthink humans and pose unforeseen risks. Harari believes AI could manipulate human behavior, reshape global power structures, and undermine governments.

All of these fears about AI have some basis.

However, how good a job has society done throughout history without AI? AI is only a tool of human beings, and it will be misused by some leaders in the same way that atom bombs, starvation, disease, climate, and other maladies have been used to harm the sentient world. AI is more of an opportunity than a threat to society.